
| | #1 | |

| [M] Reviewer Join Date: Nov 2004 Location: Waregem

Posts: 6,466

| With the performance scaling article in the back of my mind, I already expressed some thoughts on the purpose of the Uncore (the IMC, really) within Intel's marketing scheme. The following thread is a worklog to find out more about the interaction between CPU, memory and uncore frequency. Let me start by quoting an earlier forum post in which I expressed my first thoughts on the purpose of the Uncore. Excerpt from forum post: Quote:

Also, it seems that the i5 7xx series only has memory ratios up to 2:10 (or 5x), whereas the i7 8xx series has ratios up to 2:12 (6x) ... to feed the 8 threads which are present on the 8xx, but not on the 7xx? In any case: for more multipliers, you need to pay more. Coincidence or marketing strategy? If it's indeed just a marketing tool, and there's no technical limitation regarding Uncore/memory frequency, why has no motherboard manufacturer tried to figure out how to 'crack' the limitation? I mean: most performance enthusiasts are ignorant when it comes to finding the right balance between frequency and timings; 90% just apply the "more equals better" rule and buy $350 2000CL7 memory kits only to find out their CPU isn't capable of running 4GHz uncore on air cooling. Having a motherboard that allows users to downclock the uncore would be a smart move marketing-wise. | |

| |

| | #2 | ||||||

| [M] Reviewer Join Date: Nov 2004 Location: Waregem

Posts: 6,466

| I came into contact with MS, owner of the famous 'lostcircuits' website, which deals with technology in much more depth than we do. Although the following remarks are posted under my nickname on this forum, all the credit for these insights goes to 'MS'! Link to discussion: http://www.lostcircuits.com/forum/vi...php?f=3&t=2609 I will try to simplify the concepts for those who need more explanation; drawing some graphs as we speak. -------------------------------------------------------------------- Quote:

Of all the different features of the architecture, that particular interface is one of the items least likely to be changed, whereas the memory controllers are just modular blocks that can be thrown in or deleted ad lib. Quote:

I'll check on the interconnect width again, I might be wrong but I think I remember having this discussion with some Intel folks. | ||||||

| |

| | #3 | ||

| [M] Reviewer Join Date: Nov 2004 Location: Waregem

Posts: 6,466

| Quote:

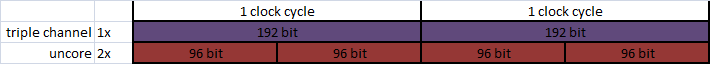

Now, MS repeats a few times that the 96-bit interface between core and uncore is an assumption he makes. Although I'm trailing him by miles when it comes to technical knowledge, I can imagine why he's making this assumption, as it makes perfect sense. Look at the following graph: IF the uncore were running 1:1 with the memory frequency, the maximum theoretical data throughput would be 96 bit/cycle, which is a problem as the triple channel memory data path equals 192 bit/cycle. So, in order to be able to address the complete memory data path in one memory clock cycle, we have to increase the Uncore frequency. In this case, it's quite simple: doubling the Uncore frequency makes it possible to handle twice the data in one memory clock cycle; so instead of 96 bits we now have 96 x 2 = 192 bit per memory clock cycle, which matches the memory data path. It's a perfect match. By the way, it's not possible to have the uncore and memory frequency run at 1:1 because ... well, MS uses a beautiful metaphor to explain: Quote:

For dual channel configurations, which are 128 bit wide, it's a more complicated problem since there's no easy fix as with triple channel (just x2). Basically, in a perfect world, increasing the uncore frequency by a factor of 1.33 (4/3) would do the trick, as instead of 96 bit per memory clock cycle you would then be able to address 96 x 4/3 = 128 bit in one memory clock cycle, which is the full dual channel bandwidth. The problem, however, is that this would make the register management quite difficult, as you can see on the graph underneath: basically, the uncore register would have to be aligned at the 1/3 and 2/3 mark. Or, put differently, the system has to keep track of where the first register output ends (1/3 of the second 96 bit series) and where the second output ends (2/3 of the third 96 bit series). It's not technically impossible, but far from an elegant (= efficient) solution. Much easier is to increase the frequency by a factor of 1.5: the only alignment is the one at 1/2, which is just splitting up into two pieces. Any questions? Last edited by Massman : 12th August 2009 at 14:41. | ||
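A rough sketch of the arithmetic in this post, for anyone who wants to play with the numbers. It assumes the 96-bit core-to-uncore interface (which is MS's assumption, not a confirmed figure) and 64 bit per memory channel; the function names are made up for illustration.

```python
from fractions import Fraction

CPU_IMC_BITS = 96   # ASSUMED core-to-uncore width (MS's assumption, not confirmed)
CHANNEL_BITS = 64   # width of one imc-to-dram channel


def memory_path_bits(channels):
    """Total memory data path in bits per memory clock."""
    return channels * CHANNEL_BITS


def exact_ratio(channels):
    """Uncore:memory ratio at which the 96-bit link exactly matches the memory path."""
    return Fraction(memory_path_bits(channels), CPU_IMC_BITS)


def link_bits_per_memory_clock(ratio):
    """Bits the 96-bit link can move during one memory clock at the given ratio."""
    return CPU_IMC_BITS * Fraction(ratio)


if __name__ == "__main__":
    # (channels, ratio discussed in the post): x2 for triple, x1.5 for dual channel
    for channels, ratio in ((3, Fraction(2)), (2, Fraction(3, 2))):
        need = memory_path_bits(channels)
        have = link_bits_per_memory_clock(ratio)
        print(f"{channels} channels: need {need} bit, exact ratio {exact_ratio(channels)}, "
              f"ratio {ratio} moves {have} bit (spare: {have - need})")
```

Using `Fraction` keeps 4/3 exact instead of rounding it to 1.33. Under these assumptions the triple channel case matches exactly at x2, while the dual channel case at x1.5 has some link capacity to spare compared with the awkward exact ratio of 4/3.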

| |

| | #4 |

| [M] Reviewer Join Date: Nov 2004 Location: Waregem

Posts: 6,466

| I put together a couple of charts regarding the performance scaling of CPU/MEM/UNC using Lavalys Everest and Pifast (don't shoot me, I just wanted to keep the testing relatively short). Since Core i7 pretty much stands for 'multiplier-overclocking', I used simple values to test scaling. Here are the results of the 'doubling in frequency' tests. 6/12 stands for: 6x memory, 12x uncore ratio. Same rule for the two others. 12/24 stands for: 12x cpu, 24x uncore ratio. 12/6 stands for: 12x cpu, 6x memory ratio. I haven't really had the time to fully analyse the charts, though. The charts above are the direct performance scaling results; afterwards, I also charted the indirect results (basically comparing the effect of doubling a second frequency; e.g.: doubling the UNC frequency when the CPU frequency has already been doubled). CPU -> UNC = when the CPU frequency has been doubled, what is the effect of doubling the uncore frequency as well? This chart is basically a representation of the previous three. To end with, I also compared the platform scaling (= increasing the CPU/MEM/UNC frequency at once). The first row is the percentage increase going from (cpu/mem/unc) 12/6/12 to 24/6/24 and the second row is going from 133 to 167MHz BCLK (4G/1G/4G). No chart, but it's quite easy to grasp the significance of the raw data. Now, the interesting part would be to see how this changes in dual and single channel configurations. Something for later this week. |

| |

| | #5 | |

| [M] Reviewer Join Date: Nov 2004 Location: Waregem

Posts: 6,466

| Okay, I'm starting to understand where I went off track. I was talking about the uncore-to-dram bus, while in fact I should have been talking about the cpu-to-imc bus. Of course, each of the imc-to-dram buses is 64-bit wide, totalling 192-bit in triple channel and 128-bit in dual channel. The cpu-to-imc bus width is 96-bit; in triple channel configuration this means 32-bit per imc (x 3), in dual channel configuration this means 48-bit per imc (x 2).

To acquire all data coming from the imc (192-bit in total), the cpu-to-imc clock frequency has to be equal to or higher than 2 x DRAM frequency as ... well, 96 x 2 = 192. So: 1 dram clock, 2 imc clocks to transfer data from dram to cpu.

In dual channel configurations, the situation differs a bit. The imc-to-dram bus widths are still 64-bit, but the total is only 128-bit. As mentioned already, the cpu-to-imc bus width remains 96-bit, split up into 2 times 48-bit coming from the two memory controllers. Each uncore clock, 3/4 of the memory transfer (48 of 64 bits per imc) has been completed, which allows the cpu-to-imc clock to be lower than 2 x DRAM frequency. The most elegant solution is to make the cpu-to-imc clock 1.5x the dram frequency; for each dram clock, 3/2 transfers can be performed, or 48 x 3/2 = 72 bit, which covers the 64 bits each imc delivers. Maybe simpler: for each 2 dram clocks, 3 uncore clocks complete the memory transfer.

Intel has always claimed to have made memory access as efficient as possible, which can be seen in the 1366-i7 design: no clocks or bus width are lost. As the memory and imc multipliers are unlocked there, it's a bit more difficult to see this, but with the locked imc multipliers on 1156 it can be seen perfectly.

LGA1156 Core i7 series: maximum memory multiplier = 2:12. As the imc multiplier needs to be equal to or higher than 1.5x the dram frequency, the lowest possible imc multiplier has to be 18x (12 / 2 x 3). When using the 2:12 memory multiplier, you are as efficient as you can be.

LGA1156 Core i5 series: maximum memory multiplier = 2:10. As the imc multiplier needs to be equal to or higher than 1.5x the dram frequency, the lowest possible imc multiplier has to be 15x (10 / 2 x 3). At 16x, you have more cpu-to-imc bus width than is needed for the transfer. So, to answer my own questions: Quote:

2) Yes, memory transfer per energy is lower on Core i5. Time to read up on Gulftown reports and see how all this is again different with Gulftown. | |
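The multiplier arithmetic for LGA1156 can be sketched in a few lines. This is only an illustration of the reasoning in this post, not anything taken from Intel documentation: the 1.5x factor is the dual channel ratio derived above, the reading of the "2:N" notation follows the post's own "12 / 2 x 3" calculation, and `min_imc_multiplier` is a made-up helper name.

```python
import math
from fractions import Fraction


def min_imc_multiplier(mem_ratio, imc_per_mem=Fraction(3, 2)):
    """Lowest whole imc (uncore) multiplier for a memory ratio written as "2:N".

    ASSUMPTION: follows the post's arithmetic (N / 2 x 3), i.e. 1.5 x N.
    """
    _, effective = (int(part) for part in mem_ratio.split(":"))
    return math.ceil(effective * imc_per_mem)


if __name__ == "__main__":
    for name, ratio in (("Core i7 8xx", "2:12"), ("Core i5 7xx", "2:10")):
        print(f"{name}: memory ratio {ratio} -> imc multiplier >= {min_imc_multiplier(ratio)}x")
```

With the i5's 2:10 ratio this gives 15x, so an imc multiplier of 16x sits one step above what the transfer needs, which is the extra bus width mentioned in the post.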

| |

| | #6 |

| [M] Reviewer Join Date: Nov 2004 Location: Waregem

Posts: 6,466

| |

| |

| | #7 |

| Madshrimp Join Date: May 2002 Location: 7090/Belgium

Posts: 79,021

| this should be put in a live article at the site

__________________  |

| |

| | #8 |

| [M] Reviewer Join Date: Nov 2004 Location: Waregem

Posts: 6,466

| And break NDA while we're at it?  |

| |

| | #9 |

| Madshrimp Join Date: May 2002 Location: 7090/Belgium

Posts: 79,021

| up until post #6 there's no NDA to speak of... ? and even #6 is questionable as those screenies don't prove anything performance-wise

__________________  |

| |

| | #10 |

| [M] Reviewer Join Date: Nov 2004 Location: Waregem

Posts: 6,466

| Correct. |

| |
