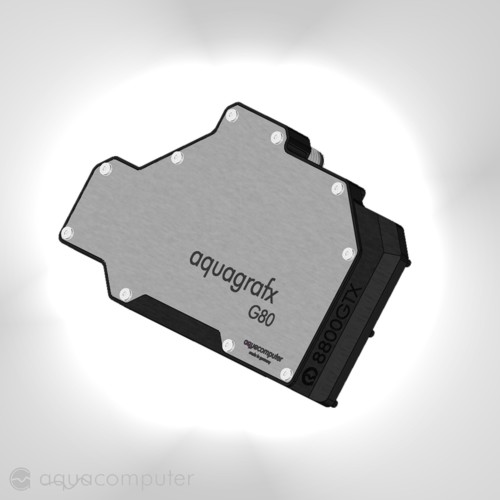

Basics of G80 overclocking explained

To overclock our GeForce 8800 card you will need RivaTuner. Once launched, you will see this on your screen:

We'll first show you how you can monitor the different clocks used with the G80 architecture. GPU, memory and shader clock can be monitored as well as the GPU temperature by following the next directions:

We are currently testing a GeForce 8800 GTS. This card has its core/ROP domain clocked at 500MHz, with 1200MHz on the shaders and 1600MHz on the memory. Those are the round numbers NVIDIA provided us with at the launch of their 8800 range, though once we launched RivaTuner we saw that the real-time clock speeds differ a bit from what NVIDIA officially claimed. Here is what you will see with an 8800 GTS:

If you scroll down you'll see the temperature chart. Temperatures around 80°C are normal when the GPU is heavily loaded:

RivaTuner can log even more. As you can see, the fan speed is controlled automatically. Once the temperature exceeds a certain level, the fan controller raises the fan's duty cycle, forcing it to spin at more revolutions per minute and so provide our G80 GPU with more cooling.
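To make the idea of temperature-driven fan control concrete, here is a minimal sketch of a linear duty-cycle ramp. It is not how the 8800's fan controller is actually programmed: the function name `fan_duty_cycle` and every threshold and duty value in it are assumptions chosen purely for illustration.

```python
def fan_duty_cycle(temp_c: float,
                   low_c: float = 60.0,    # assumed: below this the fan idles
                   high_c: float = 90.0,   # assumed: at or above this the fan runs flat out
                   min_duty: float = 0.60, # assumed idle duty cycle (60%)
                   max_duty: float = 1.00) -> float:
    """Map a GPU temperature to a fan duty cycle between min_duty and max_duty."""
    if temp_c <= low_c:
        return min_duty
    if temp_c >= high_c:
        return max_duty
    # Linear ramp between the two thresholds.
    fraction = (temp_c - low_c) / (high_c - low_c)
    return min_duty + fraction * (max_duty - min_duty)


for temp in (50, 75, 95):
    print(f"{temp}°C -> {fan_duty_cycle(temp):.0%} duty cycle")
```

A real controller may use steps or hysteresis instead of a straight line; the point is only that a higher temperature maps to a higher duty cycle and therefore a faster fan.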

Now that we know how our card can be logged, let me show you how you can alter the clock speeds:

A new menu will pop up:

Through RivaTuner we can adjust the GPU and memory clock speeds. The best way to find your maximum stable overclock is to increase the clock speed of your card by 5~10MHz at a time. Remember that increasing the clock speed makes your video card less stable, which might end in a system crash.
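The step-by-step approach described above can be sketched as a simple loop. This is only an illustration of the procedure, not something RivaTuner exposes: `find_max_stable_clock`, the `is_stable` callback and the `fake_stress_test` stand-in are hypothetical names, and in practice the "test" is you running a stress test by hand after each change.

```python
from typing import Callable


def find_max_stable_clock(start_mhz: int,
                          limit_mhz: int,
                          step_mhz: int,
                          is_stable: Callable[[int], bool]) -> int:
    """Raise the clock in small steps and return the last value that passed."""
    if step_mhz <= 0:
        raise ValueError("step_mhz must be positive")
    last_good = start_mhz              # the stock clock is assumed to be stable
    candidate = start_mhz + step_mhz
    while candidate <= limit_mhz:
        if not is_stable(candidate):   # first failure: stop and keep the last good clock
            break
        last_good = candidate
        candidate += step_mhz
    return last_good


# Hypothetical stand-in for a real stress test:
# pretend this particular card fails above 560 MHz.
def fake_stress_test(clock_mhz: int) -> bool:
    return clock_mhz <= 560


best = find_max_stable_clock(start_mhz=500, limit_mhz=650, step_mhz=10,
                             is_stable=fake_stress_test)
print(f"Highest clock that passed: {best} MHz")
```

Small steps matter because the loop stops at the first failure: with a 10MHz step you end up at most 10MHz below the card's real limit, whereas a large jump could skip straight past it.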

RivaTuner has a small built-in test that you always have to pass once you set a new clock frequency, but we recommend using more in-depth stress-test software such as Futuremark's 3DMark. It's always good to keep an eye on the card's temperature. As we said, 80°C is within the normal range, but once the temperature exceeds a certain level, performance will start to drop, your card will show graphical anomalies or your system might hang. Make sure you have plenty of airflow going over the G80 card.

Now that you know the basic steps to overclock your GeForce 8800, we can dive deeper into the article.
